Service with a Robot Smile

Engineers have designed robots that can collaborate with humans. But to work effectively in close quarters, the machines need to behave in ways that people understand as non-threatening.

Everything looks normal at my local Giant grocery store. Customers are wheeling their carts up and down the aisle, plucking items off the shelves, and checking things off their lists.

In one aisle, though, there is something besides focused shoppers. A tall, slender machine rolls slowly along, monitoring the shelves and the floor, looking for things that are out of place. When it comes to the end of an aisle, it encounters the normal traffic tangle caused by a few shoppers politely trying to move past one another. The machine stops and waits for traffic to clear, then proceeds around the end of the aisle and down the next one.

The machine even has a name: Marty. Giant installed Marty and identical robots in all its stores to monitor spills and scan the shelves to see if they are stocked correctly.

Robots in industrial settings are now common. Nuclear power plants, for example, are inspected almost entirely by robot. Planes and spacecraft were developed to navigate using automation and computer control. For a generation or two, robots have grown in number on factory floors, tightly controlled environments where workers are generally hyper-aware of dangerous machinery and take pains to avoid running into it. Such robots don’t need to be warm and fuzzy, and generally they are not.

More and more, however, new types of intelligent robots are entering cities, workplaces, and public spaces with the intent to interact with humans. Companies such as Starship Technologies have developed picnic cooler-size delivery robots, and Knightscope offers a robotic security guard. Streets full of cars, sidewalks teeming with people, and grocery stores swarming with shoppers are much more complex, random, and unpredictable than industrial settings.

A lot of that randomness is due to the humans with whom the robots are sharing the space. “Out in the wild” is what Tim Rowland, CEO of Badger Technologies in Nicholasville, Ky., which manufactures the Marty series, calls the new robot operating environment.

“Badger robots are around consumers on a regular basis,” he said. “There’s been a lot of interesting reactions and responses to these robots.”

Engineers like Rowland are working to develop robots that work well around people, that are essentially “friendly” rather than threatening.

Laura Major, chief technology officer at Motional, an autonomous car company in Cambridge, Mass., and the coauthor of the recent book, What to Expect When You’re Expecting Robots, said, “As robots become a part of our everyday world, they’re going to become social entities, and they need to behave.”

Working With Robots

The so-called everyday world has decidedly different zones of activity and different expectations for the people in them. Our homes ought to be our castles. At the workplace, on the other hand, we are expected to conform to the rules set by employers. Because simple robots were very rule-based and needed human “coworkers” to accommodate their limitations, it was easier to introduce robotic arms to factories than to kitchens.

Everyday life includes a third space, too: public areas such as roads, sidewalks, and grocery store aisles that are not as constrained as factory floors but still carry expectations of regular behavior. Engineers developing self-driving vehicles, which are essentially autonomous robots with passengers, have spent years trying to get these machines to navigate this third space perfectly.

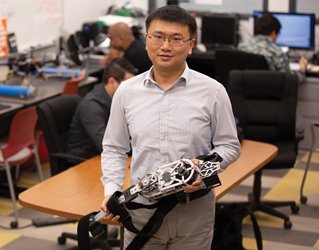

“Autonomous vehicles and drones are great examples of how humans can work with robots,” said Wenlong Zhang, an assistant professor in the Polytechnic School in the Ira A. Fulton Schools of Engineering at Arizona State University in Tempe.

Robotic taxis, such as those deployed by Motional in Las Vegas, are enabling researchers to gain real-world experience to learn how to get robots to interact with humans in socially comprehensible ways. The robo-taxis have given over 100,000 rides on the Las Vegas Strip to paying passengers, who can simply order an autonomous car via Lyft. One thing engineers have realized is that robots need to make quick decisions based on a limited amount of data.

“When you interact with another vehicle, the total amount of time is probably less than a couple of seconds. How much data can you collect?” Zhang asked. “A manufacturing robot can sit there and collect lots of data in the same setting. Autonomous vehicles create more challenges in human-robot interaction.”

It isn’t just the limited timeframe for human interactions that creates a challenge, Zhang said. He points to work at Arizona State developing wearable exoskeletons. These devices are intended to assist patients during rehab and recovery and include an arm brace that uses air pressure to assist movements and an intelligent, motorized knee brace to support stroke survivors in rehabilitating their walking ability. The combination of motorized parts and programmed intelligence makes these exoskeletons essentially soft wearable robots.

Although the environment in rehabilitative settings can be limited, the ultimate goal is for assistive exoskeletons such as these to be worn in the field by soldiers or construction workers. “The challenge we have seen is that they’re going to wear these in all types of situations,” Zhang said. “How do we program for that?”

Major and her co-author Julie Shah, associate professor of aeronautics and astronautics and associate dean for social and ethical responsibilities of computing at the Massachusetts Institute of Technology in Cambridge, see other challenges in this third space. In their book, they envision a scenario of drone vehicles replacing human delivery drivers and couriers. “Soon, your front yard and our neighborhoods may be swarming with drones, which will deliver small packages, and passengers will zip across town in the air over roadway traffic,” they wrote.

Unless these robots know how to behave around the people they are trying to serve, all this activity could seem menacing.

Scalable Techniques

Major has worked in autonomous systems her entire career, starting in aeronautics and astronautics before entering the field of self-driving cars. “I saw an opportunity to help take the experience I’ve had with industrial applications and bring those lessons over to the consumer space and make robotics for consumers a faster reality,” she recalled.

One of the lessons she and Shah have learned, and which they relay in their book, is that humans react to how a robot or automated system behaves, not how it looks. Clunky, rigid robots can seem friendly, while others sculpted from soft materials to look like people can seem threatening. Artificial intelligence and machine learning are key technologies here, because they allow a robot to learn a wide range of behaviors so it can react to the countless situations it will encounter.

“You can’t script that into the robot,” Major said. “It’s important that we have scalable techniques such as AI and machine learning that allow us to learn these behaviors and be able to set up our robots so they can follow social norms.”

To build a robust machine learning platform, the system must draw from a vast library of robot-human interactions. That’s where robots such as Badger Technologies’ Marty come in. Rowland, an engineer who spent decades in product development before he got into sales, said Badger Technologies began deploying Marty in 2019, installing about 540 robots in Giant Food Stores, Martin’s, and Stop & Shop grocery stores on the East Coast. “We’ve logged almost half a billion miles of travel during very busy times,” he said. “We’re traveling autonomously safely, and we’ve had no incidents in many hours of operation in the public.”

The robots traverse store aisles looking for spills, obstacles, debris, or anything else that could pose a safety risk to customers and store employees. If Marty detects a problem that needs attention, it sends a message to the store’s public address system and pings a device carried by the front-end manager. The robot then positions itself next to the hazard and turns on its light and audible speaker.
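The hazard response described above is an ordered sequence of steps, which can be sketched in a few lines of Python. This is only an illustration: the `Hazard` type, the `response_plan` function, and the step names are hypothetical and do not come from Badger Technologies’ software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hazard:
    """Hypothetical record of something the robot has spotted."""
    kind: str   # e.g. "spill" or "debris"
    aisle: int


def response_plan(hazard):
    """Return the ordered steps the article describes for a detected hazard.

    The step names are illustrative labels, not a real robot API.
    """
    message = f"{hazard.kind} detected in aisle {hazard.aisle}"
    return [
        ("announce_on_pa", message),           # message to the store PA system
        ("ping_manager_device", message),      # alert the front-end manager
        ("park_beside_hazard", hazard.aisle),  # position next to the hazard
        ("light_and_speaker_on", True),        # warn shoppers nearby
    ]


for step, detail in response_plan(Hazard("spill", 7)):
    print(step, detail)
```

Keeping the plan as plain data makes the order of the steps easy to inspect and test before any hardware is involved.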

Additionally, the robots serve as mobile data collection systems to archive information and images to help improve operational efficiencies. Data gathered during the robots’ continuous store loops can address out-of-stock, shelf-layout, and price-integrity issues.

As Rowland explained, the main sensing technology Marty uses for navigation is lidar (light detection and ranging), also known as laser scanning. Comparable systems are found in self-driving cars for parking and similar functions. Several lidar sensors are mounted on the robot.

“When we first deploy one of these robots, we have a handheld version of that technology that we walk through the grocery store with to read all the structural entities,” Rowland said. “It gives us a three-dimensional point cloud that we translate into a map the robot then stores in its control system.”
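Rowland does not say how the point cloud becomes a map. One common approach, shown here purely as an assumption, is to project the 3D points onto a 2D grid of occupied cells. The function name, cell size, and height limits below are hypothetical values chosen for illustration.

```python
def occupancy_grid(points, cell_size=0.5, min_z=0.1, max_z=2.0):
    """Project 3D points (x, y, z) onto a set of occupied 2D grid cells.

    Points below min_z (floor returns) or above max_z (ceiling returns)
    are ignored. All thresholds are assumed values, not Badger's.
    """
    occupied = set()
    for x, y, z in points:
        if min_z <= z <= max_z:
            occupied.add((int(x // cell_size), int(y // cell_size)))
    return occupied


# A tiny made-up scan: two shelf points in one cell, one in another,
# and one floor-level point that gets filtered out.
scan = [(0.2, 0.2, 1.0), (0.3, 0.4, 1.5), (1.2, 0.1, 0.5), (2.0, 2.0, 0.0)]
print(sorted(occupancy_grid(scan)))
```

A navigation system can then treat occupied cells as shelves and walls and plan its route through the cells that remain free.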

The robots also employ cameras pointed at various angles to look at the shelves and down the aisle, providing three-dimensional depth information. This array of sensors monitors a safety zone with a radius of about four feet around the robot; whenever anything moves in that zone, the machine takes appropriate action, such as slowing or stopping.
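The slow-or-stop behavior can be illustrated with a short sketch. Only the four-foot radius comes from the article; the inner stop distance, the normalized cruise speed, and the linear ramp between them are assumptions made for the example, not a description of Marty’s actual controller.

```python
import math

SAFETY_RADIUS_FT = 4.0  # from the article: roughly a four-foot zone
STOP_RADIUS_FT = 1.5    # assumed: distance at which the robot halts
CRUISE_SPEED = 1.0      # assumed: normalized cruising speed


def speed_command(robot_xy, moving_objects_xy):
    """Return a speed between 0 and CRUISE_SPEED based on the nearest mover."""
    nearest = min(
        (math.dist(robot_xy, p) for p in moving_objects_xy),
        default=math.inf,
    )
    if nearest >= SAFETY_RADIUS_FT:
        return CRUISE_SPEED  # nothing inside the safety zone
    if nearest <= STOP_RADIUS_FT:
        return 0.0           # too close: stop and wait
    # Inside the zone but not yet at the stop distance: slow down linearly.
    span = SAFETY_RADIUS_FT - STOP_RADIUS_FT
    return CRUISE_SPEED * (nearest - STOP_RADIUS_FT) / span


print(speed_command((0.0, 0.0), [(6.0, 0.0)]))  # shopper well outside the zone
print(speed_command((0.0, 0.0), [(1.0, 0.0)]))  # shopper very close
```

Ramping the speed down, rather than stopping abruptly at the edge of the zone, is one way a robot’s motion can read as predictable to the people around it.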

In their book, Major and Shah see the kind of teamwork between Marty and human store workers as a precursor to a deeper partnership. “Some of our most stubborn societal problems could be better addressed by the kind of collaboration we envision. The applications are vast,” they wrote.

Major said most of the work on the book was done prior to the start of the pandemic, but the past 12 months have underscored the importance of these issues. “In this post-COVID-19, there’s even more openness for adopting this technology to keep people out of dangerous circumstances, whether that’s delivering food to somebody or sanitizing an office,” she said.

Some of the answers may come from systems more familiar to engineers: industrial robots used in manufacturing.

“What are the hard lessons we learned in aerospace and factory robots that we can now transfer and use those lessons to help inform the design of consumer robots?” Major asked. One key, she said, was making sure that robots in consumer settings have the flexibility to react to changing environments. “We need robots to be directable so you can communicate with it or change its behavior,” Major said.

It’s a challenge that roboticists are still grappling with, even in constrained factory settings. “The problem we have when we apply these robotic manipulators is, we don’t really know how to let them team up with humans,” Zhang said. “What roles should humans take? What roles should robots take?”

As robots like Marty prove more and more successful, they will create a new set of challenges, both technical and social. “It will take years if not decades for us to see the best practices with these robots,” Zhang said. “It’s like cell phones. The next question is, how do we bring down cost and make them easy to maintain? There are a lot of aspects.”

Someday, though, we could see robots intermingling with humans as commonplace, and we’ll expect machines with names like Marty to greet us at the grocery store or deliver our food.

Tom Gibson, P.E. is a consulting mechanical engineer based in Milton, Pa. He specializes in machine design, sustainability, and recycling and publishes Progressive Engineer, an online magazine and information source.
